“ASICs, magic and pro-wrestling are closely guarded secrets.”

Not so long ago you could not dream of running open source software on a 100Gbps router, but we now operate a fully open source network that can scale to billions of packets per second. Atomic built a 100Gbps IP network running Debian and drivers in the Linux kernel.

About 10 years ago a large percentage of our internet traffic was international. Unless you lived in the US or EU, content was far away and connections had high latency.

Since about 2014 content delivery networks (CDNs) have moved content very close to the end user, usually in the same city with latency as low as 1ms. This is what makes on-demand video streaming business models like Netflix work. Local fibre to the home (FTTH) services started rolling out around the same time and resulted in rapidly increasing traffic volumes.

Modern ISP networks need to provide a thin and efficient layer between the content networks and the customer. This involves high speed routing and ideally packets should only pass through a single high speed router connecting the content network to the fibre network.

Software vs Hardware Routing

Atomic Access was started in 2018 as a fibre-only ISP, initially doing software routing using Debian 9 and FRR version 3 on Xeon D CPUs and Intel X710 network cards. This was fully open source and worked well for a while. Our only real issue was port density, as we were using 4-port SFP+ NICs. As our customer numbers grew we needed to scale traffic volumes and route at hardware (ASIC) speeds.

Hardware routing with ASICs (Application-Specific Integrated Circuits) is orders of magnitude faster than software routing on general-purpose CPUs. A fairly modern Linux server can probably route about 8 Gbps of traffic before it starts running into software interrupt limitations. Compare that to modern ASICs that can route 50 Tbps. That is gigabit versus terabit speeds, well over 1000x faster. ASIC routing also delivers more consistent latency (less jitter).
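
As a quick sanity check on that gap, the two figures quoted above can be compared directly. This is only arithmetic on the rough numbers in the text (about 8 Gbps for a Linux server, 50 Tbps for a modern ASIC); the names in the snippet are illustrative.

```python
import math

# Rough figures quoted in the text; both are approximations.
SOFTWARE_ROUTER_BPS = 8e9   # ~8 Gbps for a fairly modern Linux server
ASIC_ROUTER_BPS = 50e12     # ~50 Tbps for a modern switching ASIC


def speedup(fast_bps: float, slow_bps: float) -> float:
    """Return how many times faster fast_bps is than slow_bps."""
    return fast_bps / slow_bps


ratio = speedup(ASIC_ROUTER_BPS, SOFTWARE_ROUTER_BPS)
orders_of_magnitude = math.log10(ratio)

print(f"ASIC is about {ratio:,.0f}x faster")
print(f"That is roughly {orders_of_magnitude:.1f} orders of magnitude")
```

With these particular figures the ratio works out to several thousand, which is where the "gigabit versus terabit" comparison comes from.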

It’s a fairly big leap moving from software to hardware routing, a big leap in cost and operational thinking. Having a software forwarding plane (logic to forward packets) in the OS is different to having an isolated and specialised hardware forwarding plane which drives the front panel ports of the router. You could still route packets between front panel ports and the operating system over a PCI bus, but the packet rate would probably kill the CPU.

With hardware routing you can’t just run ‘tcpdump’ and expect to see packets flowing through the OS. It would be very convenient if CPUs could route billions of packets per second with consistent latency, but that’s simply not realistic and at some point you need to start using ASICs.
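
One way to see why is to look at the time budget per packet. The sketch below assumes a single CPU core running at 3 GHz (an illustrative figure, not a measurement from our hardware) and asks how many clock cycles that core has available for each packet at a given packet rate.

```python
# Assumption for illustration only: one core clocked at 3 GHz.
ASSUMED_CLOCK_HZ = 3e9


def nanoseconds_per_packet(packets_per_second: float) -> float:
    """Time available to handle one packet, in nanoseconds."""
    return 1e9 / packets_per_second


def cycles_per_packet(packets_per_second: float,
                      clock_hz: float = ASSUMED_CLOCK_HZ) -> float:
    """Clock cycles one core can spend on each packet."""
    return clock_hz / packets_per_second


for pps in (1e6, 1e8, 1e9):
    print(f"{pps:>13,.0f} pps: "
          f"{nanoseconds_per_packet(pps):8.1f} ns and "
          f"{cycles_per_packet(pps):7.0f} cycles per packet")
```

Under this assumption a core gets about three cycles per packet at a billion packets per second, far less than a single main-memory access typically takes. Spreading the load over many cores helps, but not by enough to close a gap of that size.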

We could have chosen to buy routers from the usual big name companies like Cisco, Arista or Juniper, but because they force you into expensive long term support contracts we struggled to buy into their ecosystems.

Even with the expensive support contracts, networking vendors can be slow to resolve software bugs. We’ve seen our upstream providers and fibre networks run into this problem a few times.

You can’t have customers on unstable connections while the router vendor takes weeks to fix a software bug and create a new OS release. You do not want to be dependent on a single vendor, their troubleshooting, and their process for updating software. This is often referred to as single vendor lock-in.

To get around the single vendor problem ISPs often have a multi-vendor strategy, buying multiple router models from multiple vendors. This can get expensive and you end up having at least two software ecosystems to manage. The result is that only bigger ISPs have hardware speed routing and there is usually no easy, gradual or cost effective upgrade path between software routing and hardware routing.

Looking at NAPAfrica (local internet exchange), about 50% of local ISPs are using software routing (Routerboard icon in BGP.tools) and about 50% are using big name vendor hardware routing. Of the big name vendor ISPs, we suspect many are using grey market equipment – 2nd hand or refurbished, to avoid the expensive support contracts. A small percentage will have a multi-vendor strategy with active support contracts.

Many big brand ISPs outsource their networks because the equipment is expensive and complex to operate. We estimate around 45% of local fibre ISP customers are on outsourced networks. Some ISPs just want to be brands – marketing and billing companies with support chat bots. The ISP staff often have no insight into the outsourced IP network, which is concerning when this is the primary function the customer is paying for.

ISPs basically have 3 options:

1) Buy new router hardware, with expensive support contracts, from more than one vendor

2) Buy refurbished router hardware, without support contracts

3) Buy inexpensive routers which do (mostly) software routing

In all these cases you are locked into proprietary software and varying levels of software instability.

Imagine being a coder without a community of developers on Stack Overflow. Imagine not being able to look at the source code or logs when troubleshooting – and paying a lot of money to be in this position.

Let’s run Debian on a 100Gbps Router

Atomic chose to do things differently. We believe in open networking, open source and open standards. We want to build and operate our own network and we want full operational control and visibility. We chose to go looking for a purist open networking solution.

Around 2019 we started following the Open Networking trend and experimented with a few devices, but they usually had closed software and ‘black box’ ASIC management. You could buy commercial 3rd party network operating systems (NOS) to run on these devices, but they were closed source and not very reliable.

For a while we considered buying multi-core Xeon servers and using accelerated software forwarding planes like DPDK + VPP, but you still run into port density challenges, and a software forwarding plane that sits outside the Linux OS was not easy to manage.

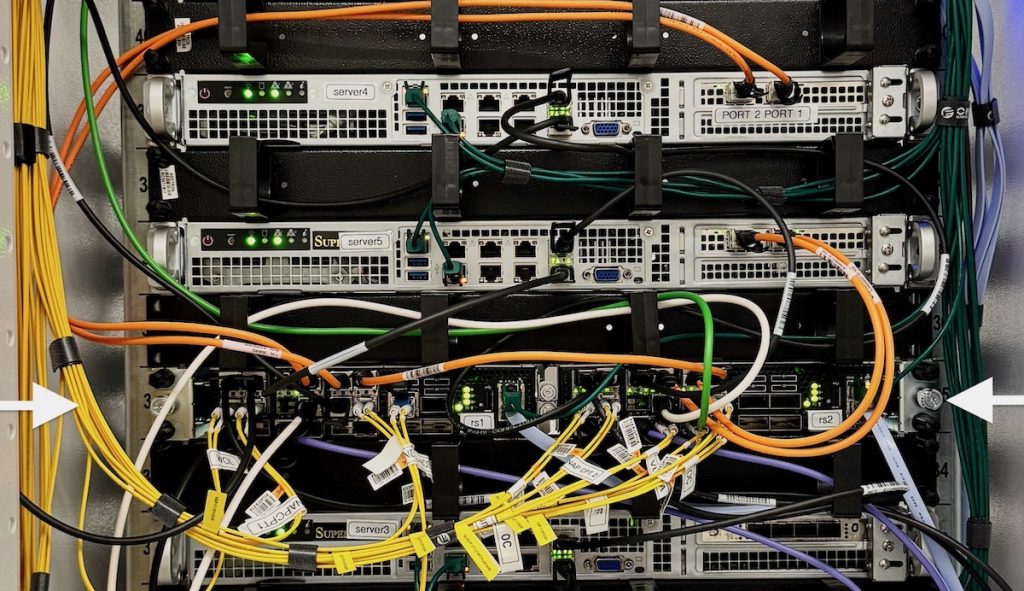

We moved away from buying more Intel NICs and started using Mellanox NICs. Then we discovered a fully open option with Mellanox Spectrum ASICs and the Switchdev project. Switchdev and the mlxsw driver are in the standard Linux kernel. We started using two Mellanox SN2010 switches. They have good port density, low power needs and are compact enough to fit two switches side by side in one rack unit.
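
Because the ASIC is driven by the kernel's own routing table, one practical check is whether a route has actually been programmed into hardware. The sketch below is a minimal example under stated assumptions: it assumes an iproute2 build with JSON output (`ip -json route show`) and that offloaded routes carry `offload` or `rt_offload` in a `flags` list. The exact flag names differ between kernel and iproute2 versions, so verify them on your own system. The function names, the `swp1` interface name and the sample routes are all hypothetical.

```python
import json
import subprocess

# Assumption: flag names vary between kernel/iproute2 versions.
OFFLOAD_FLAGS = {"offload", "rt_offload"}


def split_by_offload(routes):
    """Split parsed `ip -json route show` entries into (offloaded, software)."""
    offloaded, software = [], []
    for route in routes:
        flags = set(route.get("flags", []))
        if flags & OFFLOAD_FLAGS:
            offloaded.append(route)
        else:
            software.append(route)
    return offloaded, software


def read_kernel_routes(family="-4"):
    """Run `ip -json <family> route show` and return the parsed route list."""
    result = subprocess.run(
        ["ip", "-json", family, "route", "show"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


# Hypothetical sample, shaped like `ip -json route show` output.
# On a real switch you would call read_kernel_routes() instead.
sample_routes = [
    {"dst": "192.0.2.0/24", "dev": "swp1", "flags": ["rt_offload"]},
    {"dst": "198.51.100.0/24", "dev": "eth0", "flags": []},
]

hw_routes, sw_routes = split_by_offload(sample_routes)
print("in hardware:", [r["dst"] for r in hw_routes])
print("software only:", [r["dst"] for r in sw_routes])
```

A route that unexpectedly lands in the software list is a useful early warning, since traffic for it would be handled by the CPU instead of the ASIC.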

It took some time to get everything working, compiling our own kernels and building Debian packages, but we ended up with a very flexible and modular solution. It is fully open source, so we can swap out software as needed. As of late 2024 we are running Debian 12 and FRR version 10.2. It also means Atomic has ownership of all parts and the improved visibility that comes from being able to see under the hood, so to speak.

We’ve proven that it is indeed possible to route at 100 Gbps speeds with just Linux. Our peering and content delivery can now scale to billions of packets per second – way more than we need.

Open Networking gives us hardware speed routing with a familiar management interface, without the vendor lock-in and related high costs. Atomic’s business and home customers now get ASIC speeds and consistent low latency routing.

The SN2000 series switches we use are often used in high-frequency trading (HFT) networks, which require consistent, ultra-low latency. A latency of 300ns is about as low as you get for a full featured IP routing switch. A nanosecond (ns) is one-billionth of a second.

Our network is fully dual-stack with IPv6 services available on all our fibre networks. We now see 50% less latency for packets crossing the Cape Town peering points with 80% less jitter.

It took 25 years between the initial Linux release and the Switchdev Spectrum driver going into the kernel (1991-2016). Linux nerds are purist and this is as open and purist as high speed networking gets.

Thank You to the Pioneers

A big thank you to the people at Mellanox and Cumulus (both now part of Nvidia) and to the Nvidia developers. They pioneered projects like ONIE and Switchdev and had the vision to make open networking possible.

Why is Open Networking good for customers?

- Cost saving – the end customer does not end up paying for expensive equipment

- Allows us to do peering and BNG (broadband network gateway) features on the same device, no need for multi-tier network designs with extra latency

- Allows us to do BNG services at ASIC speeds, no need for software BNGs (usually PPPoE)

- Avoids the BNG licensing from big name vendors, which is usually very expensive

- Consistent low latency, as low as 300ns between content and fibre networks

- Stable and reliable, no surprises, less downtime

- Non-outsourced network with full ownership of all parts – no ‘passing the buck’ with the ISP saying: we are waiting for the vendor to fix something

Why is Open Networking good for an ISP?

- Linux everywhere, 100% Open Source

- Cost saving, no expensive support contracts

- Full control over the network

- Avoid vendor lock-in, proprietary software and slow bug fixes

- Familiar management, we’ve been working with Linux for over 25 years

- Open troubleshooting, not at the mercy of the vendor

- Visibility, can see under the hood, add custom monitoring and telemetry

- Flexibility, modular, swap out software as needed

- Many great Open Source projects and add-ons to choose from

- Being part of the Open Source and Open Networking community

- Faster rate of software upgrades and innovation

What are the specs of the SN2010?

- 57 Watt power

- x86 CPU, dual-core Atom

- 8 GB memory

- 256 GB storage (we did a DIY SSD upgrade)

- You can reverse the fan direction if needed

- 256k ASIC entries: 210k IPv4, 30k IPv6, 8k MACs

- 10 / 25 / 40 / 100 Gbps port speeds

- 1.3 Bpps (billion packets per second)

- 16 MB buffers

- 300ns latency
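
To put the 1.3 Bpps figure in context, here is a small sketch of the standard Ethernet line-rate calculation for the port speeds listed above. It uses the worst case of minimum-size 64-byte frames, each of which also occupies an 8-byte preamble and a 12-byte inter-frame gap on the wire. The function and constant names are illustrative.

```python
MIN_FRAME_BYTES = 64       # smallest Ethernet frame
WIRE_OVERHEAD_BYTES = 20   # 8-byte preamble + 12-byte inter-frame gap

ASIC_PPS = 1.3e9           # packet rate from the spec list above


def line_rate_pps(link_bps: float, frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """Maximum packets per second a link can carry at a given frame size."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_on_wire


for gbps in (10, 25, 40, 100):
    pps = line_rate_pps(gbps * 1e9)
    print(f"{gbps:>3} Gbps: {pps / 1e6:7.2f} Mpps at 64-byte frames")

links_at_100g = ASIC_PPS / line_rate_pps(100e9)
print(f"1.3 Bpps covers about {links_at_100g:.1f} x 100 Gbps of 64-byte frames")
```

Even in this worst case, 1.3 Bpps covers between eight and nine 100 Gbps links' worth of minimum-size frames in one direction. Real traffic typically has a much larger average packet size, so the packet rate is rarely the limiting factor.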

Keep reading

- The mlxsw project (GitHub)

- A similar article with the SN2700 (more technical)

- The world in which IPv6 was a good design (software vs hardware networking)

- More about Atomic’s Network

- A version of this article was published on TechCentral

- How Yandex uses Switchdev